Amazon’s ML/AI Product Strategy: Gen AI App Dev Tools, Foundation Models, Infrastructure

From Amazon Q to PartyRock, Bedrock and SageMaker

Announcements at the AWS re:Invent event last week gave us a deeper look into Amazon’s ML and AI strategy across the tech stack: from the server infrastructure for model training and inference, to the development tools for building with LLMs and other foundation models, to applications and assistants.

This is a deep dive into the product portfolio, value proposition and market positioning that currently drive and differentiate AWS’ ML/AI offering, with highlights from last week’s announcements.

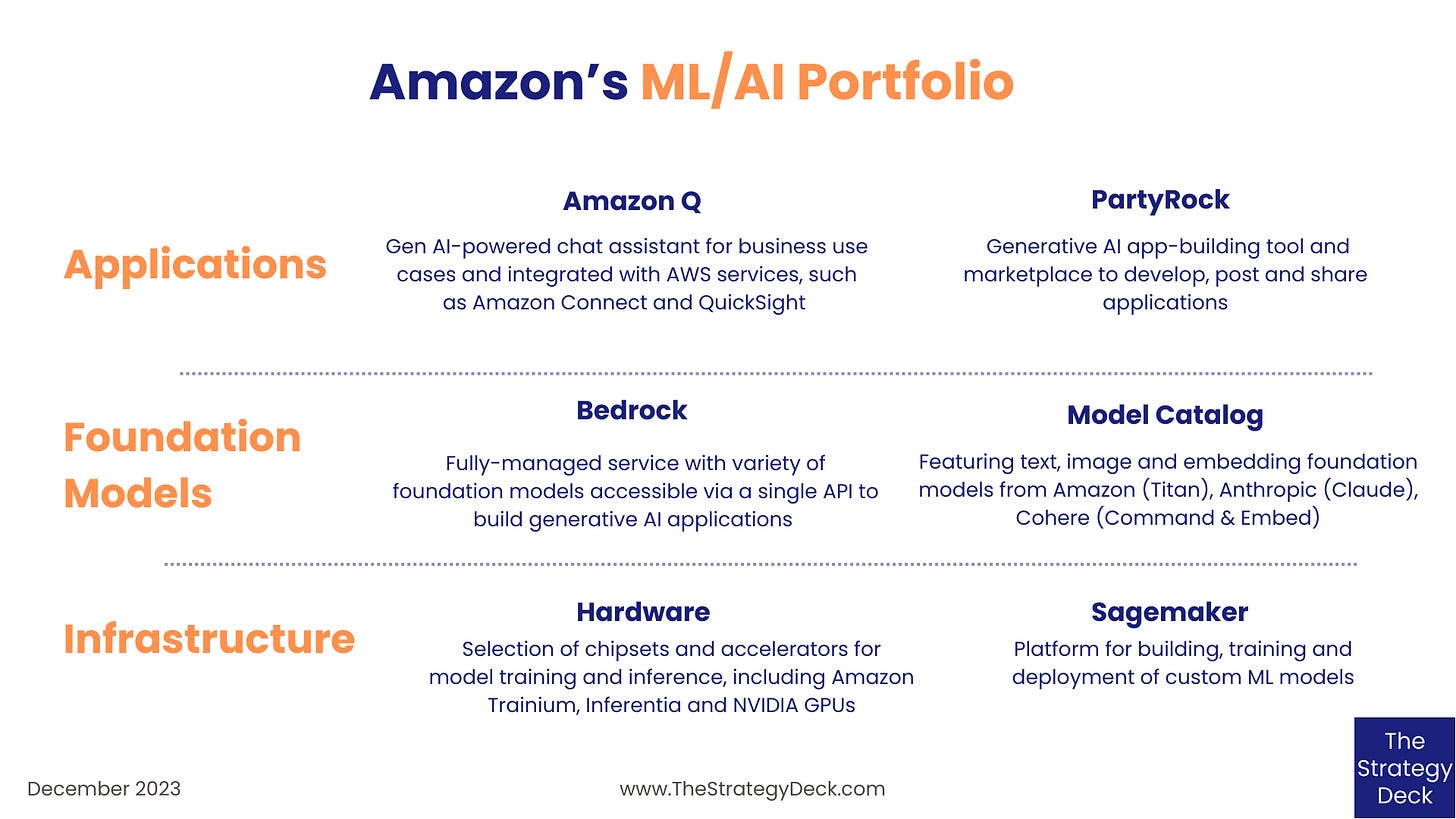

AWS is developing its Machine Learning products across 3 layers:

Business applications and features for AWS Services that leverage generative AI

Development tools to build with foundation models

Hardware infrastructure and accelerators for training and inference

The theme that resonated throughout last week’s ML announcements was choice: choice of hardware and chipsets, choice of foundation models, and flexibility and customization options for developing AI applications.

Amazon Q: Enterprise Chatbot and Gen AI Features for AWS Services

Amazon Q, currently in preview, is a generative AI-powered enterprise chat assistant for business use cases such as question answering, knowledge discovery, writing emails, summarizing text, drafting document outlines, and brainstorming ideas. Available through the AWS developer console, Q can be used to build custom applications and workflows, including retrieval-augmented generation (RAG) features.

Administrators can define the context for responses, restrict specific topics, and instruct Q to respond using only company information or to complement its responses with knowledge from the underlying model. Fine-grained access controls restrict responses to certain data or tailor them to the employee’s level of access, and Q can connect to more than 40 data sources, including Amazon S3, Google Drive, Microsoft SharePoint, Salesforce, ServiceNow and Slack.
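Amazon Q’s actual permission model and admin configuration are not shown here. As a rough illustration of the general idea (refuse restricted topics, then answer only from documents the employee may see), here is a minimal sketch. The `Document` record, the group-based permissions, and all function names are hypothetical, and the substring topic check is a deliberately naive stand-in for whatever classification a real assistant uses.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical document record: text plus the groups allowed to read it.
    doc_id: str
    text: str
    allowed_groups: frozenset = field(default_factory=frozenset)


def visible_documents(docs, user_groups):
    """Keep only documents that share at least one group with the user."""
    return [d for d in docs if d.allowed_groups & set(user_groups)]


def blocked_topic(question, denied_topics):
    """Return the first denied topic mentioned in the question, else None (naive substring match)."""
    lowered = question.lower()
    for topic in denied_topics:
        if topic.lower() in lowered:
            return topic
    return None


def answer_context(question, docs, user_groups, denied_topics):
    """Refuse denied topics; otherwise return the context the user is entitled to."""
    topic = blocked_topic(question, denied_topics)
    if topic is not None:
        return f"I can't help with questions about {topic}."
    allowed = visible_documents(docs, user_groups)
    return "\n".join(d.text for d in allowed)
```

With two documents, one readable only by `hr` and one by `eng` and `hr`, an engineer asking about deploys would only receive the engineering document as context, while a question mentioning a denied topic is refused before any retrieval happens.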

Q is also available (in preview) in Amazon QuickSight, where it augments business intelligence capabilities with automatically created, shareable insights and stories, executive summaries, and natural-language Q&A over the data.

In Amazon Connect, Q is meant to speed up customer support with recommended responses and actions based on a better real-time understanding of customer intent, as well as post-contact summarization.

To explore and promote a variety of use cases, AWS is also developing PartyRock, a code-free generative AI app builder and marketplace where users can create, publish and share applications.

Bedrock: AI Application Development Service and Foundation Model Catalog

Amazon Bedrock is AWS’s fully managed service for building generative AI applications on top of a variety of foundation models, all accessible through a single API. It lets users experiment with and evaluate different models, privately customize them with their own data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and build agents that execute tasks across enterprise systems and data sources. Because Amazon Bedrock is serverless, there is no infrastructure to manage.
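To make the “single API” point concrete, here is a minimal sketch of sending the same prompt to models from different families for side-by-side evaluation. It assumes boto3’s `bedrock-runtime` client and its `converse` operation, which was added to Bedrock after re:Invent 2023; the model IDs in the usage comment are examples only, and availability depends on your region and account access. The helper names are hypothetical, and the client is passed in so the logic can be exercised without AWS credentials.

```python
def build_converse_request(model_id: str, prompt: str, max_tokens: int = 256) -> dict:
    # The same message structure is used regardless of which model family is targeted.
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask(client, model_id: str, prompt: str) -> str:
    # `client` is assumed to be a boto3 "bedrock-runtime" client, or any object
    # exposing a compatible converse(**kwargs) method.
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]


def compare_models(client, model_ids, prompt: str) -> dict:
    # Send one prompt to several models so their answers can be compared.
    return {model_id: ask(client, model_id, prompt) for model_id in model_ids}


# Usage (requires boto3, AWS credentials, and model access in the chosen region):
#   import boto3
#   bedrock = boto3.client("bedrock-runtime", region_name="us-east-1")
#   compare_models(bedrock,
#                  ["anthropic.claude-3-haiku-20240307-v1:0", "meta.llama3-8b-instruct-v1:0"],
#                  "Summarize RAG in one sentence.")
```

Keeping the request builder separate from the network call is a design choice for testability: swapping models only changes the `modelId` string, which is the property the single-API pitch is about.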

The latest features include guardrails (currently in preview), which promote safe interactions and help implement responsible AI policies, and Knowledge Bases, which connect foundation models to company data sources for Retrieval Augmented Generation applications.
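The core RAG loop behind a feature like Knowledge Bases is: embed the company content, retrieve the chunks most similar to the question, and prepend them to the prompt. The sketch below shows that loop in plain Python. It does not use Bedrock’s API: the bag-of-words `embed` function is a stand-in for a real embedding model (such as Titan Embeddings), the in-memory list stands in for a vector store, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a bag-of-words term-count vector.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    # Rank stored chunks by similarity to the query and keep the top k.
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, chunks: list, k: int = 2) -> str:
    # Augment the question with retrieved context before sending it to a model.
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

For example, with chunks about refund timing, refund requirements and office holidays, asking “How long do refunds take?” with `k=1` places only the refund-timing chunk in the prompt. A managed service adds chunking, semantic embeddings and a scalable vector index on top of this same pattern.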

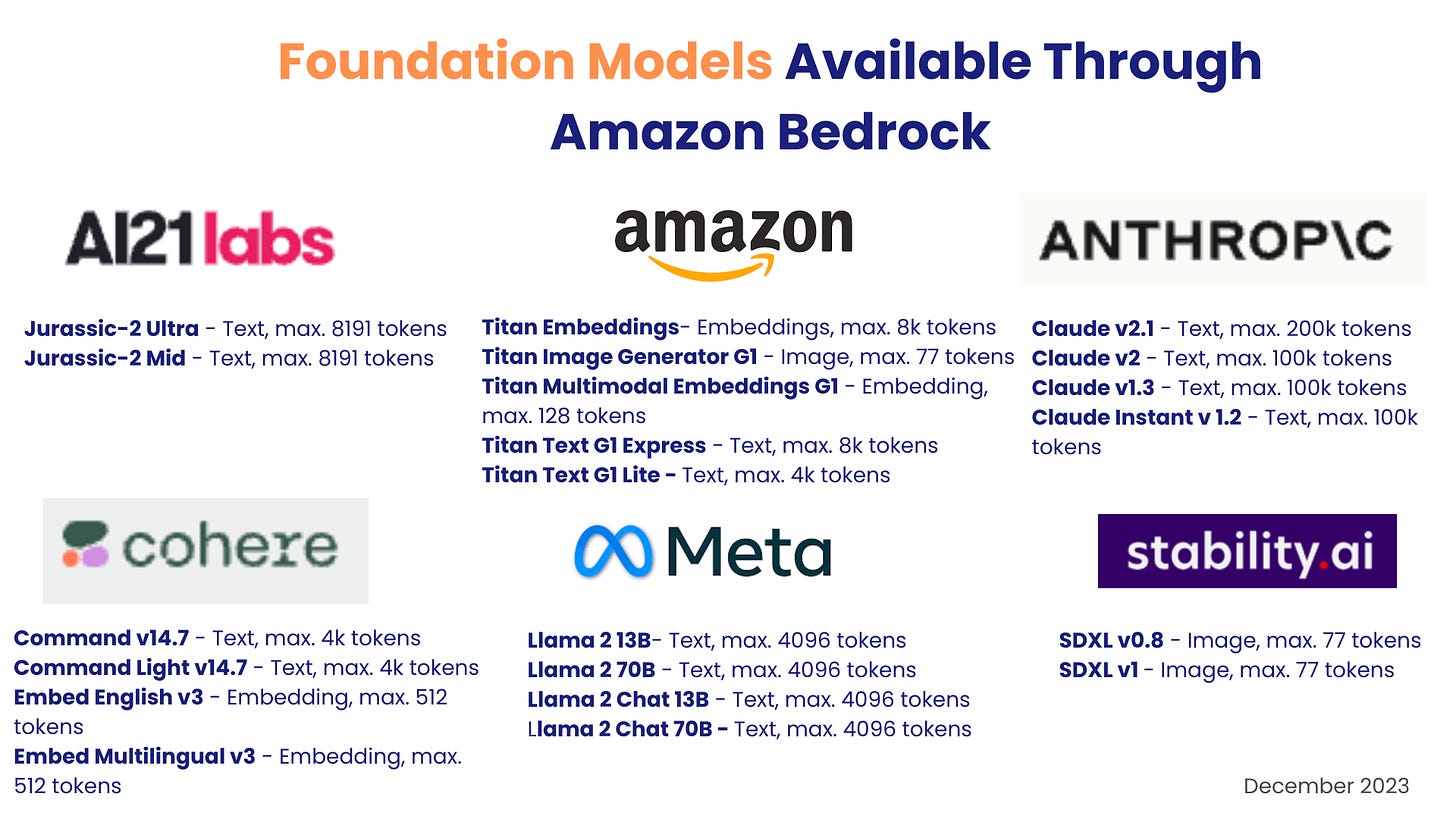

Over the past six months, AWS has been building out its foundation model catalog, with new additions announced last week. Through Amazon Bedrock, AI application developers can use pre-trained models from seven different families: Amazon’s Titan, Anthropic’s Claude, Cohere’s Command and Embed, AI21 Labs’ Jurassic, Meta’s Llama and Stability AI’s Stable Diffusion.

Amazon’s Titan family includes text, image and embedding models, with more likely to come.

Hardware Infrastructure: Chipsets and SageMaker

At the infrastructure level, AWS offers a choice of chipsets and accelerators for model training and inference, including Amazon’s own Trainium and Inferentia chips as well as partner hardware such as NVIDIA GPUs.

SageMaker, the end-to-end platform for building, training and deploying custom models, offers a variety of IDEs and tools so that ML engineers, data scientists and business analysts alike can use it. Beyond its integration with JupyterLab and RStudio, SageMaker Studio was recently updated with an improved web interface and a Code Editor based on Code-OSS, adding even more interface choice.

Choice and Flexibility as the Differentiation Vector

From Amazon Q to PartyRock, Bedrock and SageMaker, Amazon is investing in ML development tools across the tech stack, with choice and flexibility as its main differentiation vector and integration with the rest of the AWS stack and services as a secondary value proposition.

Will that be enough to capture the market opportunity?

Related posts from The Strategy Deck: