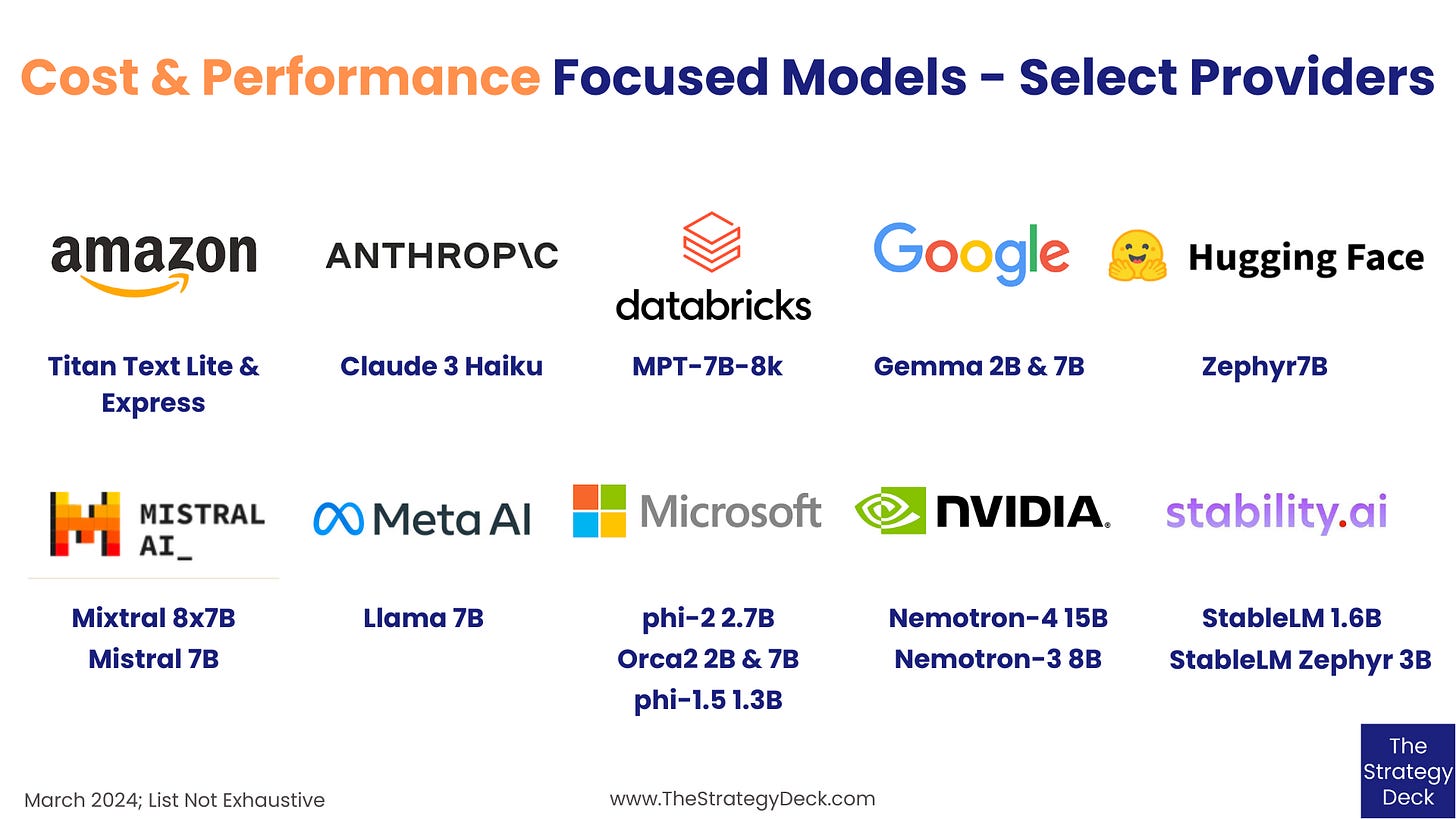

Cost- and Performance-Focused Models: Updates and Releases

Models to Round Out the Collection, Including Recent Releases and More Open Source Options

This post adds recently released models, along with more open-source options, to the collection of cost- and performance-focused models, giving an even more comprehensive view of this AI research direction.

Last week, Anthropic announced the Claude 3 model family, which includes Haiku, its most compact and affordable model, optimized for responsiveness. The company claims the model can “read an information and data dense research paper on arXiv (~10k tokens) with charts and graphs in less than three seconds.”

With a 200k-token context window and multimodal capabilities for text and images, Haiku is aimed at use cases where fast responses matter, such as customer support, content moderation and logistics applications.

Haiku has not yet been released. It will be available through the Anthropic API, priced at US$0.25 per million input tokens and US$1.25 per million output tokens. Its technical report and the Constitutional AI safety principles that Anthropic uses to train all of its models are publicly available.
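Because Haiku is priced per token, the cost of a request can be estimated from its input and output token counts. The sketch below uses the announced list prices; the token counts in the example are hypothetical, and the calculation ignores any billing details beyond list pricing (discounts, taxes, or how images are converted into tokens).

```python
# Claude 3 Haiku list prices as announced, in US$ per million tokens.
INPUT_PRICE_PER_M_USD = 0.25
OUTPUT_PRICE_PER_M_USD = 1.25


def haiku_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the list-price cost of one request from its token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens * INPUT_PRICE_PER_M_USD / 1_000_000
    output_cost = output_tokens * OUTPUT_PRICE_PER_M_USD / 1_000_000
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: a ~10k-token paper in, a 1k-token summary out.
    per_request = haiku_cost_usd(10_000, 1_000)
    print(f"per request: ${per_request:.5f}")                 # $0.00375
    print(f"per 1,000 requests: ${per_request * 1_000:.2f}")  # $3.75
```

Output tokens cost five times as much as input tokens, so for summarization-style workloads the long input still dominates the bill only when the response is short.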

Anthropic’s previous cost and performance focused model is Claude Instant 1.2, which was released in August 2023.

Late last month, NVIDIA researchers published the technical report for Nemotron-4 15B, the company's newest foundation model, which has not yet been released. It is a decoder-only transformer that uses Rotary Position Embeddings and a SentencePiece tokenizer, and it was trained on 8 trillion text tokens. It supports 54 natural languages and 43 programming languages.
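Rotary Position Embeddings (RoPE) encode a token's position by rotating pairs of query and key dimensions through position-dependent angles, so that attention scores depend on the relative distance between tokens. The minimal pure-Python sketch below illustrates the general RoPE technique, not NVIDIA's implementation; the interleaved pairing of dimensions and the base of 10000 (the default in the original RoFormer formulation) are assumptions.

```python
import math
from typing import List


def rope(vec: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each (2i, 2i+1) pair of `vec` by angle pos * base**(-2i/d).

    Assumption: interleaved pairing and base=10000; real models may
    pair dimensions differently or use another base.
    """
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("embedding dimension must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[2 * i], vec[2 * i + 1]
        # Standard 2-D rotation of the pair (x0, x1).
        out[2 * i] = x0 * c - x1 * s
        out[2 * i + 1] = x0 * s + x1 * c
    return out


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.5, 2.0]
    k = [1.1, 0.4, -0.7, 0.9]
    # Same relative offset (2) at different absolute positions.
    print(dot(rope(q, 5), rope(k, 3)))
    print(dot(rope(q, 12), rope(k, 10)))
```

Because each pair is only rotated, vector norms are unchanged, and the dot product of a query at position m with a key at position n depends only on m - n, which is why the two printed scores match.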

According to the authors, “it outperforms all existing similarly-sized open models on 4 out of 7 downstream evaluation areas and achieves competitive performance to the leading open models in the remaining ones. Specifically, Nemotron-4 15B exhibits the best multilingual capabilities of all similarly-sized models, even outperforming models over four times larger and those explicitly specialized for multilingual tasks.”

Its predecessor, the Nemotron-3 8B series, has been available since November 2023 under the NVIDIA AI Foundation Models Community License Agreement; it includes a Base model, three Chat models and one Q&A model.

Released in December 2023, Stable LM Zephyr 3B from Stability AI is a fine-tuned version of StableLM 3B, trained similarly to Hugging Face’s Zephyr 7B beta model. It was designed for text generation and instruction following and is available through Hugging Face under the Stability AI Non-Commercial Research Community License Agreement.

Meta launched the 7B version of its Llama 2 series in July 2023, along with the larger 13B and 70B models. It has a 4k-token context window, supports text only, and is built on a decoder-only transformer architecture. Released under the Llama 2 Community License and accompanied by the technical report “Llama 2: Open Foundation and Fine-Tuned Chat Models”, it is available through the Meta AI website and Hugging Face.

TinyLlama is a 1.1B parameter model developed by the StatNLP Research Group at the Singapore University of Technology and Design. It was trained on 3 trillion tokens between August and December 2023, using the same architecture and tokenizer as Llama 2.

Licensed under Apache 2.0, the model, along with intermediate checkpoints at 1, 1.5, 2, and 2.5 trillion tokens, can be accessed on GitHub and Hugging Face. Its technical report is titled “TinyLlama: An Open-Source Small Language Model”.

Zephyr 7B, released in October 2023, is a series created by Hugging Face to improve task accuracy and alignment in small models. Fine-tuned from Mistral 7B using Direct Preference Optimization (DPO), Zephyr 7B is available under an MIT license through GitHub and Hugging Face. Its technical report is titled “Zephyr: Direct Distillation of LM Alignment.”
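Direct Preference Optimization trains a model directly on pairs of preferred and rejected responses, without a separate reward model: the loss rewards the policy for raising the log-probability of the preferred response, relative to a frozen reference model, more than that of the rejected one. The sketch below computes the standard DPO loss for a single pair; the log-probabilities and the value of beta are made-up illustrative inputs, not Zephyr's actual training configuration.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z)), i.e. softplus(-z)."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not Zephyr's setting
) -> float:
    """DPO loss for one preference pair:
    -log sigmoid(beta * ((pi_w - ref_w) - (pi_l - ref_l)))
    where each term is a summed log-probability of a full response.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    return neg_log_sigmoid(margin)


if __name__ == "__main__":
    # Policy identical to the reference: loss is log 2.
    print(dpo_loss(-11.0, -11.0, -11.0, -11.0))
    # Hypothetical numbers: policy favors the chosen response more
    # than the reference does, so the loss drops below log 2.
    print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

When the policy matches the reference model, the margin is zero and the loss equals log 2; it falls as the policy shifts probability toward the preferred response. In training, this per-pair loss is averaged over a batch and minimized with gradient descent.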

The other models in this collection are listed below. For details and links, check out my previous post on cost- and performance-focused foundation models.

Gemma 2B and 7B from Google

Stable LM 2 1.6B from Stability AI

Mixtral 8x7B and Mistral 7B from Mistral AI

Phi-2 2.7B, Phi-1.5 1.3B and Orca 2 7B from Microsoft

Titan Text Lite and Express from Amazon

MPT-7B-8k from Databricks