Growth Vectors in Foundation Models: General Purpose LLMs

Featuring 13 Models from 10 Providers, Including Cohere, Stability AI, Mistral, Fireworks AI

Personal note: I have availability coming up and am looking for a full-time or contract role. Besides doing AI market analysis, I am also an accomplished Corporate Strategy and Technical Product Manager, with over 15 years of experience building products and features, and developing and executing cross-functional initiatives in consumer tech, browsers and web technologies. I am looking for open roles and opportunities in:

Product Management

Corporate Strategy

Planning / Operations

If you or a friend is looking for a seasoned, well-rounded manager for Product, Strategy, or Operations, reply to this email, write to alex [at] thestrategydeck [dot] com, or reach out on LinkedIn.

Back to regular scheduling…

Foundation models greatly influence the characteristics of AI applications built on top of them, so making informed decisions about which ones to deploy will significantly impact performance and usability for your use case. Each choice involves trade-offs, but having a wide variety of options to select from is crucial, whether you directly license a model through an API or deploy an open-source one on your own infrastructure.

Over the past 12 months, both established tech companies and start-ups have announced many new releases and updates, each offering its own optimizations and value propositions.

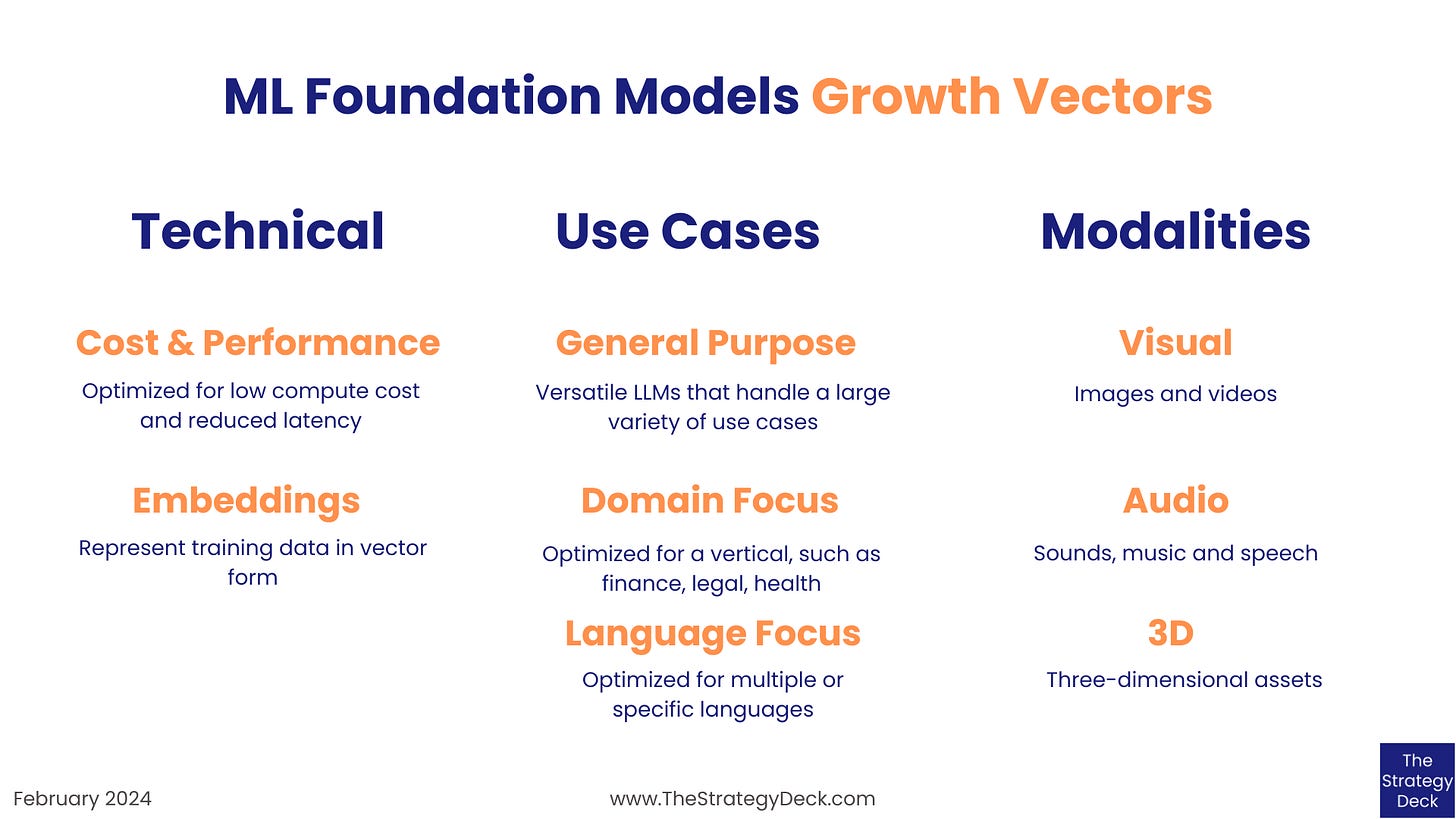

This is Part 2 in the Foundation Model Growth Vectors series, which focuses on the main areas of investment and innovation in the space. Part 1 analyzed companies and products focused on performance and cost, as well as embeddings; this part turns to a survey of General Purpose LLMs.

This collection covers 13 representative LLMs from 10 providers that are publicly available and were released in 2023 and 2024. It includes both open-source and proprietary models, presented below in reverse chronological order.

Released this month (February 2024), Mistral Large is a model with a 32k context length optimized for reasoning on high-complexity tasks. It is available under a proprietary license on the Mistral AI platform and in Azure AI Studio through a Model-as-a-Service business model, and it supports English, French, Italian, German, Spanish, and code.

Also this month, Google made the first models of the Gemini series available, including Gemini Pro and Gemini Pro Vision, along with embedding and retrieval models. Gemini uses a decoder-only Transformer architecture, has a 32k context length, and accepts prompts that include images up to a maximum size of 4MB. It is available through Google's Vertex AI Studio.

Command, from Cohere, is another series of General Purpose models, with a Base and a Light version. It has a 4k context length, is optimized for summarization and chat, and is updated continuously. You can try it through the API on the Cohere platform.

In January 2024, AI development platform provider Fireworks AI released Fire LLaVA, a 13B-parameter LLaVA vision-language model built from a vision encoder and the open-source, Llama-based Vicuna model. The model has been instruction-tuned on multimodal language-image instruction-following data generated by GPT-4, as described in the LLaVA technical reports “Visual Instruction Tuning” and “Improved Baselines with Visual Instruction Tuning”. Fire LLaVA is available through the Fireworks.ai API and on Hugging Face under the Llama 2 Community License.
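To illustrate how a LLaVA-style model connects its two components: the original LLaVA paper (“Visual Instruction Tuning”) maps the vision encoder's output into the language model's word-embedding space with a trainable projection, and “Improved Baselines with Visual Instruction Tuning” replaces that single matrix with a two-layer MLP. The notation below follows those papers; that Fire LLaVA uses exactly this connector is an assumption here, not something stated by Fireworks AI.

```latex
\begin{aligned}
\mathbf{Z}_v &= g(\mathbf{X}_v) && \text{features of image } \mathbf{X}_v \text{ from the vision encoder } g \\
\mathbf{H}_v &= \mathbf{W}\,\mathbf{Z}_v && \text{LLaVA: linear projection into the word-embedding space} \\
\mathbf{H}_v &= \mathbf{W}_2\,\sigma(\mathbf{W}_1\,\mathbf{Z}_v) && \text{LLaVA-1.5: two-layer MLP, } \sigma \text{ a nonlinearity}
\end{aligned}
```

The resulting visual tokens H_v are concatenated with the embeddings of the text instruction and passed to the language model (Vicuna), which generates the response.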

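In August 2023, the Large Model Systems Organization (LMSYS Org), an open research organization, released Vicuna 1.5, a fine-tuned version of the Llama-2 13B model. It has a 4k context length (a 16k variant is also available). The model weights are released under the Llama 2 Community License on Hugging Face, and the FastChat code used to train and serve it is available under the Apache 2.0 license on GitHub.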

In July 2023, Stability AI released Stable Beluga 1 and 2 under non-commercial licenses (CC BY-NC 4.0 for version 1). Version 1 is a 65B-parameter model based on Llama-1, trained with supervised fine-tuning (SFT) on synthetic data. Version 2 was fine-tuned from Llama-2 70B. Both models are available on Hugging Face.

Llama-2, released by Meta on July 18, 2023, is available in three sizes: 7B, 13B, and 70B. It comes in two variants, the pretrained Llama 2 and the dialogue-tuned Llama 2 Chat, both with a 4k context length and text-only input and output. Llama-2 is built on an optimized decoder-only Transformer architecture, enhanced with rotary positional embeddings (RoPE) and root-mean-square normalization (RMSNorm), as described in its technical report and model card. It is released under the Llama 2 Community License and is available through Meta AI and Hugging Face.
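The two architectural components named above can be sketched in a few lines. This is a minimal pure-Python illustration, not Meta's implementation: `rms_norm`, `apply_rotary`, and `dot` are hypothetical helper names, eps=1e-5 is an assumed default, base=10000 is the value from the original RoPE (RoFormer) paper, and real models apply these operations to batched tensors inside every Transformer layer.

```python
import math

def rms_norm(x, weight, eps=1e-5):
    """RMSNorm: divide x by its root-mean-square (no mean subtraction), then apply a learned per-dimension gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def apply_rotary(x, position, base=10000.0):
    """RoPE: rotate each pair (x[2i], x[2i+1]) by the angle position * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("rotary embeddings need an even dimension")
    out = []
    for i in range(0, d, 2):  # i already equals 2 * pair_index
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = x[i], x[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out

def dot(a, b):
    return sum(u * v for u, v in zip(a, b))

# Query-key scores after rotation depend only on the relative offset between positions.
q, k = [1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 0.0]
near = dot(apply_rotary(q, 5), apply_rotary(k, 3))   # offset 2
far = dot(apply_rotary(q, 12), apply_rotary(k, 10))  # offset 2
print(math.isclose(near, far, abs_tol=1e-9))         # prints True
```

Unlike LayerNorm, RMSNorm skips mean-centering, which makes it slightly cheaper; RoPE encodes position by rotation rather than by adding a position vector, so relative distance falls out of the attention dot product.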

Claude 2, developed and released by Anthropic, comes in two versions: v2, released on July 11, 2023, and v2.1, released on November 21, 2023. v2 offers a 100k context window, and v2.1 doubles it to 200k. Both versions are based on the Transformer architecture and were trained through a combination of unsupervised learning, Reinforcement Learning from Human Feedback (RLHF), and Constitutional AI principles. Claude 2 is available under a proprietary license through the Anthropic API, and Anthropic has published a technical report for it.

PaLM 2, launched by Google on May 10, 2023, is available in two versions: Bison, tailored for text and chat applications, and Gecko, optimized for embeddings. PaLM 2 is optimized for advanced reasoning, translation, and code generation, and is available through the PaLM API in the Vertex AI model catalog. Google has also published a PaLM 2 technical report.

GPT-4, the latest iteration of OpenAI's generative pre-trained transformer models, was released on March 14, 2023. The Base version is generally available, while the Turbo and Vision versions are currently in preview. The Base model comes in two configurations, with 8k and 32k context lengths, both trained on data up to September 2021. The Turbo and Vision versions, along with the updated Base model (all in preview), extend the context length to 128k, can produce at most 4,096 output tokens, and are trained on data up to April 2023. GPT-4 is available under a proprietary license through the OpenAI API as the gpt-4 model. OpenAI has published both a GPT-4 technical report and a system card.
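Because the prompt and the generated output share a single context window, these limits translate into a simple token budget. A minimal sketch: the model keys and `max_prompt_tokens` are illustrative, the exact token counts (8,192, 32,768, and 128,000) are assumed from OpenAI's published limits as of early 2024, and measuring a real prompt would require the model's tokenizer (for example, OpenAI's `tiktoken` library).

```python
# Context windows in tokens for illustrative GPT-4 variants (assumed from OpenAI's early-2024 limits).
CONTEXT_WINDOWS = {
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
    "gpt-4-turbo-preview": 128_000,
}
MAX_OUTPUT_PREVIEW = 4_096  # output cap for the preview models

def max_prompt_tokens(model: str, max_output_tokens: int) -> int:
    """Prompt and completion share one window, so the prompt gets whatever the output budget leaves."""
    window = CONTEXT_WINDOWS[model]
    if not 0 < max_output_tokens < window:
        raise ValueError(f"output budget must be between 1 and {window - 1} tokens")
    return window - max_output_tokens

print(max_prompt_tokens("gpt-4-turbo-preview", MAX_OUTPUT_PREVIEW))  # prints 123904
print(max_prompt_tokens("gpt-4", 1_000))                             # prints 7192
```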

GPT-3.5 Turbo was released on March 1, 2023, and is available in two versions: Base and Instruct. The Base version comes in two context-length configurations, 4k and 16k, both trained on data up to September 2021. GPT-3.5 Turbo is available under a proprietary license through the OpenAI API as the gpt-3.5-turbo model. Technical evaluations of this model series can be found in the papers “A Comprehensive Capability Analysis of GPT-3 and GPT-3.5 Series Models” and “GPT-3.5, GPT-4, or BARD? Evaluating LLMs Reasoning Ability in Zero-Shot Setting and Performance Boosting Through Prompts”.
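For reference, a request to gpt-3.5-turbo is a JSON body containing a model name and a list of role-tagged messages, sent via POST to OpenAI's Chat Completions endpoint with an `Authorization: Bearer <API key>` header. The sketch below only builds that body; `build_chat_request` is a hypothetical helper, the default `max_tokens=256` is an arbitrary choice, and the network call is deliberately left out.

```python
import json

CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(user_message, system_prompt=None, model="gpt-3.5-turbo", max_tokens=256):
    """Build (but do not send) a Chat Completions request body."""
    messages = []
    if system_prompt:
        # Optional system message that steers the assistant's behavior.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "max_tokens": max_tokens}

body = build_chat_request("Summarize Llama 2 in one sentence.", system_prompt="Be concise.")
print(json.dumps(body, indent=2))
```

The Instruct version, gpt-3.5-turbo-instruct, is instead served through the legacy Completions endpoint (/v1/completions), which takes a single `prompt` string rather than a `messages` list.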

Jurassic-2, released by AI21 Labs on March 9, 2023, is offered in three versions: Ultra, Mid, and Light. With an 8k context length, the model is multilingual, supporting languages including English, Spanish, French, German, Portuguese, Italian, and Dutch. Jurassic-2 is available under a proprietary license from AI21 Labs through AI21 Studio.

Llama-1, introduced by Meta on February 24, 2023, is available in four sizes: 7B, 13B, 33B, and 65B. It has a 2k context length and was distributed under a non-commercial research license. Its architecture is described in the paper “LLaMA: Open and Efficient Foundation Language Models” and in its model card.

The ML foundation model space is a vibrant and competitive market, driven by the need to cater to a wide range of product requirements, from high-complexity reasoning tasks to specialized embeddings and retrieval functionalities.

The trend towards longer context windows is clear across this survey: from 2k tokens in Llama-1 and 4k in Llama-2 and Command, to 32k in Mistral Large and Gemini, 100k in Claude 2, and 200k in Claude 2.1. Longer contexts let applications work over entire documents, codebases, or long conversation histories in a single prompt, which suggests that future AI tools will be able to draw on much larger bodies of information and provide more accurate, contextually relevant responses.
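In practice, context length can serve as a first filter when shortlisting models. A rough sketch using the approximate figures quoted in this survey (in thousands of tokens); `models_that_fit` is a hypothetical helper, and it ignores the output budget, pricing, modality, and licensing, all of which matter in a real selection.

```python
# Approximate context windows, in thousands of tokens, as quoted in this survey.
CONTEXT_K = {
    "Llama-1": 2,
    "Llama-2": 4,
    "Command": 4,
    "Jurassic-2": 8,
    "GPT-3.5 Turbo (16k)": 16,
    "Mistral Large": 32,
    "Gemini Pro": 32,
    "Claude 2": 100,
    "GPT-4 Turbo": 128,
    "Claude 2.1": 200,
}

def models_that_fit(required_k):
    """Return models whose window is at least required_k thousand tokens, smallest window first."""
    fits = sorted((k, name) for name, k in CONTEXT_K.items() if k >= required_k)
    return [name for _, name in fits]

print(models_that_fit(50))  # prints ['Claude 2', 'GPT-4 Turbo', 'Claude 2.1']
```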

I’m looking forward to seeing how this space evolves, as its full potential has yet to be realized.