Small Foundation Models: Technical Advances and Value Drivers

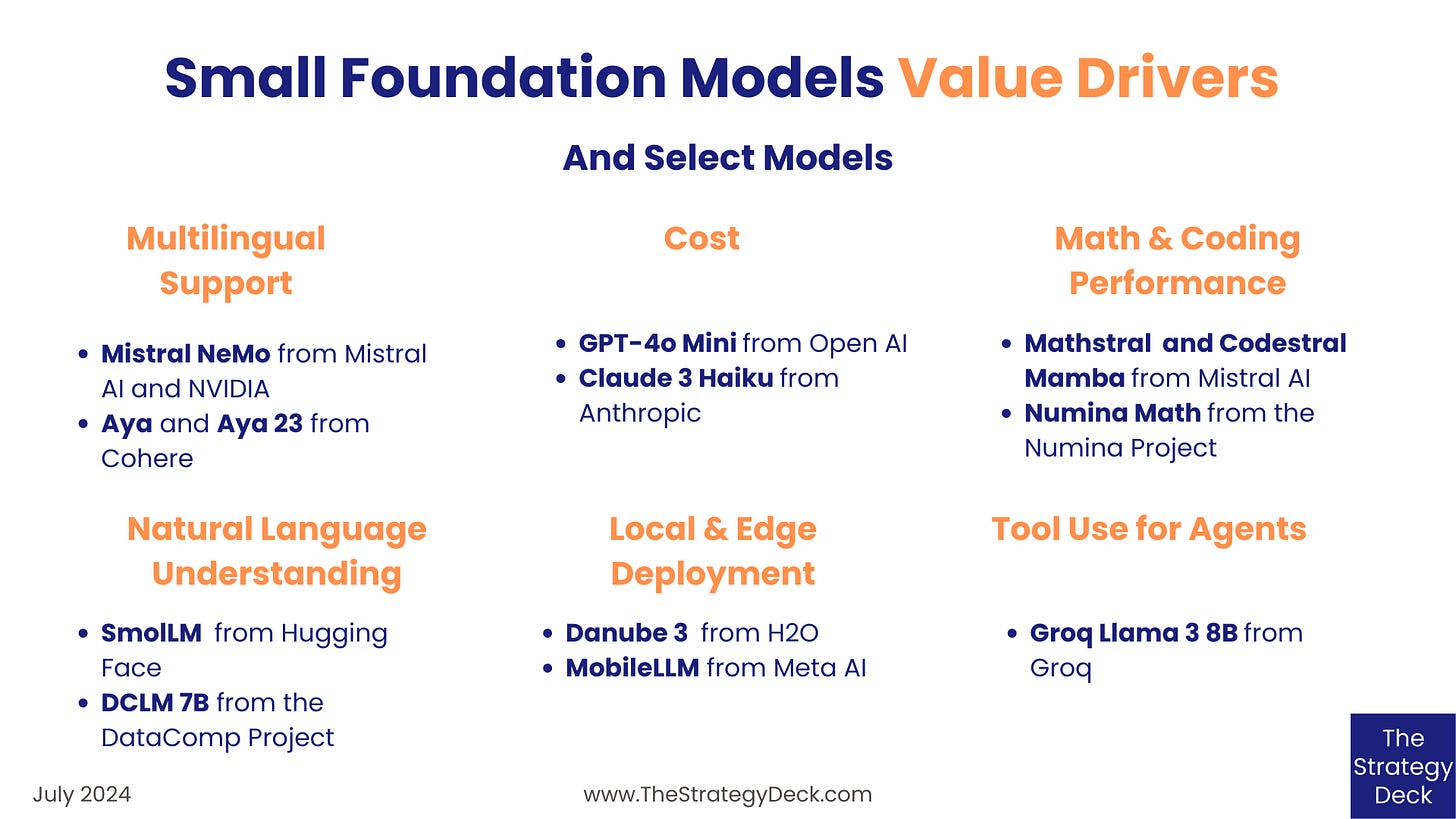

Driving This Segment Are Multilingual Support; Performance in Math, Coding, and Natural Language Understanding; Deployment on Local and Edge Devices; and Tool Use for Agent Implementations

In the past few weeks we’ve seen an impressive number of small foundation model releases that enable lower-cost, lower-latency deployment. This segment includes both open-source models and proprietary, API-only models. It spans general-purpose versions as well as models focused on specific use cases such as coding, math, and natural language understanding.

This post looks at the technical advances and value drivers in this segment, focusing on the latest releases. Read on for:

Improved multilingual support in general-purpose models, such as Mistral NeMo and Cohere’s Aya series

Lower pricing for OpenAI’s proprietary models

Training performant models with high-quality, textbook-like data, such as SmolLM from Hugging Face and DCLM 7B from the DCLM project

Improved efficiency for math and coding use cases through Mistral’s Mathstral and Codestral Mamba, as well as NuminaMath 7B

New models optimized for local, offline, and edge-device deployment, featuring the Danube 3 series from H2O and MobileLLM from Meta AI

Tool-use and function-calling capabilities for deployment in agent systems, featuring Groq’s Llama 3 8B
