Domain-Focused Models: Code LLMs

Models and Opportunities for Programming Tasks in Python, C++, C#, Java, JavaScript, R, Rust, etc.

Building a coding AI assistant (like GitHub’s Copilot) or a software engineering agent (like Devin)? Here is a deep dive into LLMs that have been developed for coding tasks and the market opportunities in the space. It features 10 model series from 7 companies, as well as university research groups across the world.

Read below about:

StarCoder 2 from the Big Code Project (led by Hugging Face and ServiceNow)

Code Llama from Meta AI

DeepSeek-Coder from DeepSeek AI

StableCode 3B from Stability AI

WizardCoder from Microsoft and Hong Kong Baptist University

Magicoder from researchers at the University of Illinois at Urbana-Champaign and Tsinghua University

CodeGen 2.5 from Salesforce AI Research

Phi-1 1.3B from Microsoft

CodeT5+ from Salesforce AI Research

SantaCoder from the Big Code Project

This is part 4 of the series on growth vectors in the AI space, looking at domain-specific models, in this case coding. Other areas with dedicated models include medicine, chemistry and biology, law, finance, math and weather, which will be featured in upcoming articles.

Parts 1 and 3 in the series cover cost- and performance-focused LLMs, and part 2 covers general-purpose ones.

Coding LLMs

StarCoder 2 was released on February 29, 2024, as the successor to StarCoder, which was launched on May 4, 2023. Developed by the Big Code Project, an open scientific collaboration led by Hugging Face and ServiceNow, it comes in 3B, 7B, and 15B versions. The smaller models were trained on 3T tokens and the 15B model on more than 4T tokens, drawn from The Stack v2 dataset, which covers over 600 programming languages, along with natural language text from sources such as Wikipedia, arXiv, and GitHub issues. The model uses Grouped Query Attention and has a 16k token context window.
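
To illustrate why Grouped Query Attention matters at a 16k context, here is a minimal sketch of how query heads share key/value heads and how that shrinks the key/value cache. The layer count, head counts, head dimension, and the reading of 16k as 16,384 tokens are all hypothetical values chosen for illustration, not StarCoder 2's published configuration, and the function names are made up for this example.

```python
def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Return the index of the key/value head that a given query head reads from."""
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("num_q_heads must be a multiple of num_kv_heads")
    group_size = num_q_heads // num_kv_heads  # query heads per shared KV head
    return q_head // group_size


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key/value cache for one sequence.

    The leading factor 2 accounts for storing both keys and values;
    bytes_per_value=2 assumes 16-bit values.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical configuration (assumption, not taken from any model card).
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 8, 128, 16_384

multi_head = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, SEQ_LEN)   # one KV head per query head
grouped = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)     # KV heads shared by groups

print("query head -> KV head:",
      [kv_head_for_query_head(h, Q_HEADS, KV_HEADS) for h in range(Q_HEADS)])
print(f"multi-head cache: {multi_head / 2**30:.2f} GiB")
print(f"grouped cache:    {grouped / 2**30:.2f} GiB")
```

With these assumed numbers, every group of four query heads shares one key/value head, so the cache is a quarter of the multi-head size. Setting the number of key/value heads to 1 gives the Multi-Query Attention variant.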

In Python coding, the 15B model scores 46.3 on HumanEval and 66.2 on MBPP. In other programming languages, it achieves 41.4 in C++, 29.2 in C#, 33.9 in Java, 44.2 in JavaScript, 19.8 in R, and 38 in Rust. Its technical paper is titled StarCoder 2 and The Stack v2: The Next Generation, and the model is available on Hugging Face and GitHub under the BigCode OpenRAIL-M v1 license.
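
Scores on HumanEval and MBPP are typically reported as pass@k, most often pass@1: the share of problems for which a generated solution passes the unit tests. As a minimal sketch, the widely used unbiased estimator for one problem is 1 - C(n-c, k) / C(n, k), where n solutions are sampled and c of them pass. The function names and the sample counts below are illustrative assumptions, not figures from any of the reports mentioned here.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of generated samples, c: samples that pass the tests,
    k: number of attempts allowed.
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples exist, so any k draws include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k over all problems, as a percentage.

    results holds one (n, c) pair per benchmark problem.
    """
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Made-up results for three problems, 10 samples each (illustrative only).
example_results = [(10, 10), (10, 3), (10, 0)]
print(f"pass@1: {benchmark_score(example_results, k=1):.1f}")
print(f"pass@5: {benchmark_score(example_results, k=5):.1f}")
```

With a single greedy sample per problem (n = 1, k = 1), the estimator reduces to the plain fraction of problems solved.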

Code Llama was launched with three initial versions – 7B, 13B, and 34B – all released on August 24, 2023, followed by a larger 70B version published on January 29, 2024.

Each version of Code Llama is available in three configurations: a base model, a Python-specialized version, and one fine-tuned to follow natural language instructions. The three smaller versions were trained on 500B tokens of code and code-related data, and the 70B version on 1T tokens.

With a 100k context window, it supports multiple programming languages, including Python, C++, Java, PHP, TypeScript, C#, and Bash. The 70B version scores 67.8 on HumanEval, 62.2 on MBPP, and 45.9 on Multilingual HumanEval.

Code Llama is freely available for both commercial and research purposes under the Llama 2 Community License, and its architecture is described in its technical report, titled Code Llama: Open Foundation Models for Code.

DeepSeek-Coder was launched on January 25, 2024, by DeepSeek AI in collaboration with researchers from Peking University. It comes in 1.3B, 5.7B, 6.7B, and 33B sizes, each with Base and Instruct versions.

The model's training involved 2T tokens from 87 programming languages, comprising 87% source code, 10% code-related natural language text, and 3% code-unrelated Chinese natural language content. Its maximum context window is 64k, with most reliable outputs in the 16k range.
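
As a quick arithmetic sketch, the percentages above translate into the following approximate token counts. It assumes "2T" means exactly 2 trillion tokens, which is a rounded figure, and the function name and category labels are made up for this example.

```python
def tokens_per_source(total_tokens: int, mixture_pct: dict[str, int]) -> dict[str, int]:
    """Split a total token budget according to integer percentage shares."""
    if sum(mixture_pct.values()) != 100:
        raise ValueError("percentages must add up to 100")
    # Integer arithmetic avoids floating-point rounding on very large counts.
    return {name: total_tokens * pct // 100 for name, pct in mixture_pct.items()}


# Shares as stated in the text; the 2T total is treated as exact (assumption).
mixture = {
    "source code": 87,
    "code-related natural language": 10,
    "Chinese natural language": 3,
}
budget = tokens_per_source(2_000_000_000_000, mixture)

for name, count in budget.items():
    print(f"{name}: about {count // 1_000_000_000}B tokens")
```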

On benchmarks, the 33B version achieves scores of 79.3 for Python, 68.9 for C++, 73.4 for Java, 74.1 for C#, and 73.9 for JavaScript. Its MBPP score stands at 70.

The technical report for DeepSeek-Coder is titled When the Large Language Model Meets Programming - The Rise of Code Intelligence and the model is available on Hugging Face and GitHub under an open license.

StableCode 3B, developed by Stability AI, was released on January 16, 2024. This decoder-only model is pre-trained on a 1.3T token dataset, which includes a blend of text and code across 18 programming languages.

StableCode 3B’s benchmark scores include 32.4 for Python, 30.9 for C++, 32.1 for JavaScript, 32.1 for Java, and 23 for Rust.

The model is available on Hugging Face under the Stability AI Non-Commercial Research Community License.

WizardCoder is a series of code LLMs developed by researchers from Microsoft and Hong Kong Baptist University by fine-tuning models using the Evol-Instruct method. The latest version is a 33B model based on DeepSeek-Coder and released on January 4, 2024. The version before it is a 15B model based on StarCoder, published on June 14, 2023.

The 33B version scores 79.9 on HumanEval, 73.2 on HumanEval+, 78.9 on MBPP, and 66.9 on MBPP+, while the 15B version scores 57.3 on HumanEval and 51.8 on MBPP.

The WizardCoder series is available on Hugging Face and its technical report is titled WizardCoder: Empowering Code Large Language Models with Evol-Instruct.

Magicoder was released on December 4, 2023, by researchers at the University of Illinois at Urbana-Champaign and Tsinghua University. It was developed by fine-tuning Code Llama 7B and DeepSeek-Coder 6.7B through the OSS-INSTRUCT method.

The Magicoder-CL version (based on Code Llama) achieves a HumanEval+ score of 55, while its instruction-tuned counterpart, MagicoderS-CL, scores 66.5. On the MBPP+ benchmark, Magicoder-CL scores 64.2 and the S version 56.5. Its MultiPL-E scores range from 40.3 in Rust to 57.5 in JavaScript, with 42.9 in Java and 44.4 in C++.

The model is available on Hugging Face and GitHub under an open MIT license and its technical report is titled Magicoder: Source Code Is All You Need.

CodeGen 2.5 7B, from Salesforce AI Research, was released in July 2023 as an updated version of CodeGen 2.

Trained sequentially on The Pile, BigQuery, and BigPython datasets, the model scores 36.2 on HumanEval and is available on Hugging Face under an open Apache 2.0 license.

The technical reports for this model series are CodeGen 2.5: Small, but Mighty; CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis; and CodeGen2: Lessons for Training LLMs on Programming and Natural Languages.

Phi-1 1.3B from Microsoft Research was released on June 20, 2023. Optimized for basic Python coding, the model was trained for four days on eight A100 GPUs, using 6B tokens of textbook-quality content sourced from the web and 1B tokens of synthetically generated textbooks and exercises, and was then fine-tuned on 200M tokens of code extracted from The Stack v1.2 dataset.

Phi-1 achieves a HumanEval score of 50.6 and an MBPP score of 55.5 and is available on Hugging Face under an open MIT license. Its technical report is titled Textbooks Are All You Need.

The CodeT5+ series, released on May 13, 2023, by Salesforce AI Research, includes 220M, 770M, 2B, 6B, and 16B parameter versions.

The models were trained on the GitHub Code dataset, extended with the CodeSearchNet dataset, covering nine programming languages: Python, Java, Ruby, JavaScript, Go, PHP, C, C++, and C#. The 16B version scores 35 on HumanEval.

The technical reports on CodeT5+ and CodeT5 are CodeT5+: Open Code Large Language Models for Code Understanding and Generation and CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation.

The models are available on GitHub and Hugging Face.

SantaCoder is a 1.1B-parameter, decoder-only model developed by the Big Code Project and released on January 9, 2023. It was trained on 236B tokens, uses Multi-Query Attention, and supports Python, Java, and JavaScript. With a 2k context window, it scores 37 on benchmarks for Java and JavaScript and 39 for Python.

Available on Hugging Face, SantaCoder can be used under the BigCode OpenRAIL-M v1 license. Its technical report is titled SantaCoder: Don’t Reach for the Stars!

Performance

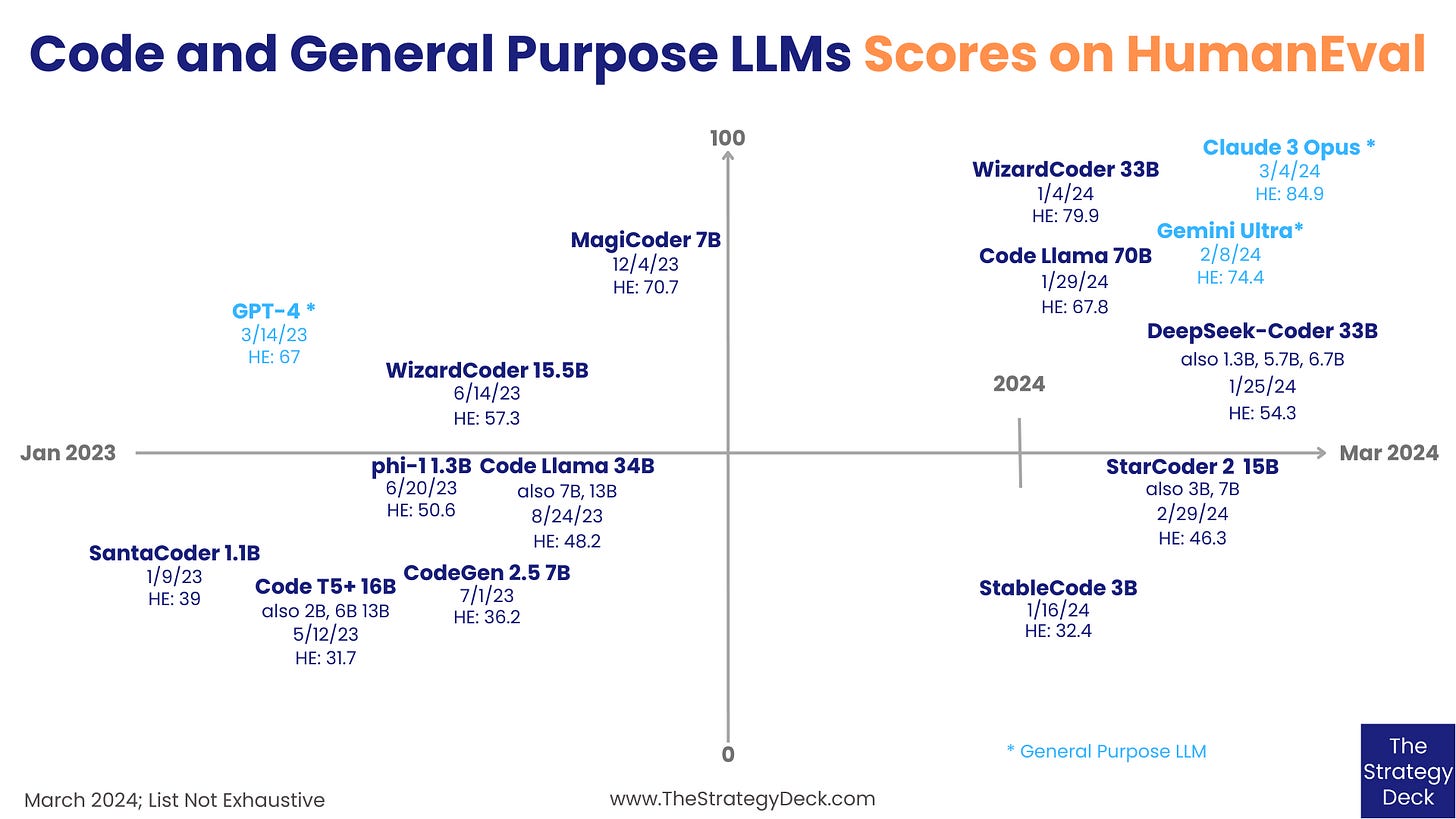

On HumanEval, a Python benchmark, coding LLMs perform similarly to the largest general-purpose LLMs: GPT-4, Gemini Ultra, and Claude 3 Opus.

Performance on other programming languages is generally lower than on Python and varies widely, with better scores on higher-resource languages such as C++ and JavaScript and lower scores on lower-resource languages such as R and C#.

Opportunities

Code development and debugging offer a strong value proposition for AI applications, and there is plenty of room for better solutions. Here are some of the opportunities around coding LLMs and applications:

Improved support and performance for programming languages other than Python in order to cover the broad range of developer audiences and coding use cases

Support for natural language interaction in languages other than English to provide access to people all over the world

Creation of more advanced benchmarks that go beyond basic programming tasks and include more complex debugging and coding problems
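
For context on the benchmark point, HumanEval and MBPP judge a generated solution by functional correctness: the code is executed and checked against test assertions. Here is a minimal sketch of that idea. The function name, the sample task, and both candidate solutions are hypothetical, and running untrusted code with `exec` is unsafe, so a real harness would use a sandboxed process with time limits.

```python
def passes_tests(candidate_source: str, test_source: str) -> bool:
    """Run a candidate solution, then its tests, in a fresh namespace.

    Returns True only if both execute without raising. This sketch does
    not guard against infinite loops or malicious code.
    """
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)  # define the candidate's functions
        exec(test_source, namespace)       # run assertions against them
    except Exception:
        return False
    return True


# Hypothetical task: implement add(a, b).
tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"
correct_candidate = "def add(a, b):\n    return a + b\n"
buggy_candidate = "def add(a, b):\n    return a - b\n"

print("correct candidate passes:", passes_tests(correct_candidate, tests))
print("buggy candidate passes:", passes_tests(buggy_candidate, tests))
```

More advanced benchmarks of the kind described above would replace the single-function task with multi-file projects, debugging scenarios, or longer test suites, while keeping the same pass/fail principle.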